THE PARADOX OF AI-GENERATED ATTENTION

In the 1970s, long before the world was at our fingertips and we casually carried trillions of bytes of content in our pockets, the Nobel laureate economist and cognitive psychologist Herbert A. Simon delivered a prophetic message: as information becomes abundant, attention becomes the scarce resource.

Today, we can all relate to this feeling of being personally overwhelmed and struggling to wade through the vast sea of content.

However, there's a twist when we consider the role of generative AI in producing content to maximise human attention.

Contrary to Simon's famous quote, "A wealth of information creates a poverty of attention," when it comes to generative AI the opposite is true: more information can lead to greater attention.

To clarify this argument, I'll start by explaining how generative AI works today (at the time of publishing this text) and how the concept of attention underpins its recent success. Then, I’ll delve into how this connects with human attention, and I’ll finish by suggesting strategies that we can use to maximise it.

Note that my focus in this analysis is on how to use Generative AI to drive human attention, the latter defined as the ability to actively process specific information in the environment while tuning out other details. I am intentionally leaving out other opportunities to use generative AI in marketing that are not directly related to this task.

I’m also stripping away much of the topic's complexity, with the purpose of leading you through the core thinking and getting to a framework for action.

1. In both humans and AI, attention is dependent on context.

Whether we are marketing professionals, artists, politicians, teachers, or even parents, communication is our main tool to capture attention.

With the advent of Generative AI, we can now explore novel ways to maximise our efforts. To do so, we must start by understanding the new capabilities of machines and the traditional processes of human beings.

I’ll start by giving a brief overview of how generative AI works through its own mechanisms of attention, which will then guide us in this exploration of how we maximise human attention using AI.

a) In AI, “Attention” is how large language models understand context.

Natural Language Processing (NLP) is what enables Generative AI to understand human direction without the need for code or complex rule-based computer instructions.

NLP is the branch of computer science that focuses on teaching machines how to make sense of human-generated data and how to learn from it. As new, better NLP systems emerge, anyone can now communicate with computers in natural language and get the machine to generate text, images, video and audio.

The tipping point of this process has been the recently developed transformer model, based on deep artificial neural networks which are not only able to efficiently recognise individual words, but also to understand them in their context.

That has been a game changer for Generative AI. It is made possible by what computer science calls the attention mechanism: the model considers all the words in the text and assesses how relevant each one is to the others in that context. For instance, if we write, "a man on a bank fishing," the AI understands that "bank" refers to a riverbank rather than a financial institution because, when it interprets "bank", the words "bank" and "fishing" are given the highest weights in the neural network.
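To make the weighting idea concrete, here is a minimal sketch of the scaled dot-product scoring used in attention, applied to the sentence above. The two-dimensional word vectors are hand-picked for illustration (an assumption of mine, not values from any real model), and `attention_weights` and `weights_for` are hypothetical helper names. Real transformers learn vectors with hundreds or thousands of dimensions and use separate query and key projections.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention: score each key against the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

TOKENS = ["a", "man", "on", "a", "bank", "fishing"]

# Hypothetical toy vectors: [water-relatedness, finance-relatedness].
# These numbers are invented for illustration only.
TOY_VECTORS = {
    "a": [0.0, 0.0],
    "man": [0.2, 0.2],
    "on": [0.1, 0.0],
    "bank": [1.0, 1.0],     # ambiguous: river or finance
    "fishing": [2.0, 0.0],  # strongly water-related
}

def weights_for(word, tokens=TOKENS):
    """Return (token, weight) pairs showing where `word` directs its attention."""
    query = TOY_VECTORS[word]
    keys = [TOY_VECTORS[t] for t in tokens]
    return list(zip(tokens, attention_weights(query, keys)))

for token, weight in weights_for("bank"):
    print(f"{token:>8}: {weight:.3f}")
```

With these toy numbers, "bank" and "fishing" receive the largest weights and the filler words the smallest, which is the behaviour the example sentence describes.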

When we strip away the layers of complexity in how generative artificial neural networks function, we see that their “understanding” of natural language is often driven by the context.

This ability to process context effectively is evolving rapidly. One example is the recently released large language model (LLM) LongLLaMA, derived from Meta’s LLaMA, which pioneers Focused Transformer training for context scaling. This means that the amount of text and data fed into the system at once can be much larger without losing accuracy.

As we increase input data, we improve context. The more we improve context, the better the systems become at aiding human users in creating content that maximises consumer attention.

b) In humans, attention is shaped by contextual determinants.

Psychology has identified determinants that affect attention in human beings. These are external factors such as intensity, size, motion, contrast, repetition or novelty of the stimuli, and internal factors like personal interest, emotions, moods, goals, and past experiences, amongst others.

Although this is not news to communication professionals, up until now it has been impossible to factor all those contextual determinants into the content we create.

But thanks to the new transformer models, all those determinants can be factored into how we use generative AI, which I’ll explain further.

The basic principle is that as we increase the input data into generative AI, we also increase accuracy in the understanding of the context that the audience is in.

2. The more the data, the richer the context, the higher the attention AI can generate.

We have been reliant on the belief that comms professionals must possess a deep understanding of human attention, gained through years of empirical observation, experience, and researched consumer insights. Usually, this understanding is distilled into a culturally relevant truth that drives creative ideas and is a standard requirement for any creative briefing.

With generative AI, we no longer need to filter knowledge down into one killer insight. In fact, we shouldn’t. The more data parameters we input, the better the AI will comprehend what is interesting and how to generate the most relevant response for each individual, thus capturing their highest attention.

In summary, the more the information, the richer the context, the more relevant and interesting the content, the higher the attention.

This approach to using the generative AI models to maximise attention through painting the richest picture of context is what I’m calling AI-Generated Attention.

But this is not a single-point solution, as we’ll see below, where I suggest different ways to explore it.

3. A framework for brands to generate attention with Generative AI

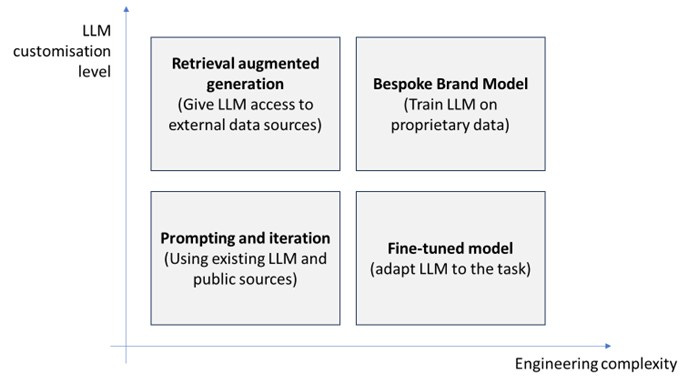

The ability to drive attention will depend on the brand’s approach to it, which I’m simplifying visually into two axes: customisation level and engineering complexity (with a direct correlation to costs and implementation timelines).

The choice of what to do depends on the needs and resources available. To better assess it, I’ll expand on each of these approaches separately.

Prompting and iteration.

Here, the process of AI-Generated Attention is empirical and experimental. The LLM follows instructions based on the small amount of data it can take in. Because we’re using an external LLM such as ChatGPT, we must always assess whether and how we input confidential information.
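As a rough illustration, a prompt can be assembled from a task plus whatever audience context we hold, while leaving out anything flagged as confidential. This is only a sketch: `build_prompt`, the field names, and the running-shoe brief are all hypothetical, and the resulting text would still need to be pasted or sent into whichever LLM you use.

```python
def build_prompt(task, context, confidential_keys=()):
    """Assemble a plain-text prompt, skipping fields flagged as confidential."""
    lines = [f"Task: {task}", "Audience context:"]
    for key, value in context.items():
        if key in confidential_keys:
            continue  # never pass flagged fields to an external LLM
        lines.append(f"- {key}: {value}")
    return "\n".join(lines)

# Hypothetical brief: every value below is invented for illustration.
prompt = build_prompt(
    task="Write a 20-word headline for a running shoe.",
    context={
        "mood": "motivated, early in the new year",
        "goal": "finish a first 10 km race",
        "customer_email": "jane@example.com",
    },
    confidential_keys={"customer_email"},
)
print(prompt)
```

Iteration then means adjusting the task wording or the context fields, comparing the outputs, and keeping what performs best.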

Retrieval augmented generation (RAG).

This is about plugging in external or proprietary audience research data. The advantage is that the LLM can draw on relevant data that improves its understanding of our audiences’ context, on top of its own base knowledge.
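The retrieval step can be sketched in a few lines: find the research notes most similar to the question, then place them in the prompt ahead of it. The example below uses simple word-overlap (cosine similarity on word counts) as a stand-in for the embedding-based search that production RAG systems typically use. The research notes, `retrieve`, and `augment_prompt` are hypothetical and invented for illustration.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Count lower-cased words in a piece of text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, top_k=2):
    """Return the top_k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:top_k]

def augment_prompt(question, documents, top_k=2):
    """Prepend the retrieved notes to the question to form the final prompt."""
    notes = "\n".join(f"- {d}" for d in retrieve(question, documents, top_k))
    return f"Use only the research notes below.\n{notes}\n\nQuestion: {question}"

# Hypothetical proprietary research notes (invented for this sketch).
RESEARCH_NOTES = [
    "Survey: runners aged 25 to 34 say morning runs improve their mood.",
    "Interview notes: commuters skip video ads but listen to podcast ads.",
    "Panel data: new runners worry about injury more than about speed.",
]

question = "What do new runners worry about?"
print(augment_prompt(question, RESEARCH_NOTES, top_k=1))
```

The design choice here is that the model itself is unchanged; only the prompt gets richer, which is why this approach sits below fine-tuning in engineering complexity.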

Note: In this framework, prompting and iteration and retrieval augmented generation work on unstructured data such as text, images, audio and video. Tables of data will first have to be transformed into conclusions, for example through a supervised learning model, which I estimate would take more than six months to train and deploy.

Fine-tuned model.

This approach adapts existing open-source models to the comms task we have. It is limited by which open-source models are available and how well they match what we require, but we can anticipate that their number will increase dramatically over time.

Bespoke brand model.

This is where attention becomes truly personal. This is the model that dominates context because it has been trained on proprietary data. To maximise consumer attention, this Generative AI model will be able to factor in external comms characteristics such as intensity, size, motion, contrast, repetition or novelty of the stimuli, and internal determinants like personal interest, emotions, moods, goals, and past experiences, amongst others. It will continuously learn from consumer interaction and become increasingly better at providing the right answer, to the right person, at the right time.

It's worth clarifying that all these options must respect legal and ethical principles. Even where regulation is still playing catch-up, I recommend looking into the industry advisory bodies, such as the IPA and ISBA’s guiding principles for agencies and advertisers on the use of generative AI in advertising.

Conclusion: Information is Generative AI’s tool for driving human attention.

AI-Generated Attention is when human attention is maximised by AI systems that understand the human context. The richer the context, the better the generated comms output and the higher the attention level it achieves.

To maximise AI-Generated Attention in our brand comms, we must provide the best possible picture of the context we are in. This approach no longer relies on finding the insight that will lead to the magic solution, but on using a large spectrum of personal and context-rich attention data, through approaches that range from basic prompting to proprietary data-driven models, as illustrated in the matrix above.

The paradox of AI-Generated Attention lies in the amount of data required to maximise human attention. In a world with an increasing wealth of information, AI-generated attention can be harnessed as a valuable resource to stand out and enrich brand-consumer interactions.

It’s the start of a new era where a wealth of information creates a wealth of attention.